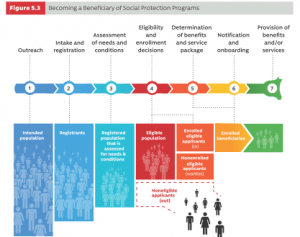

The World Bank’s Sourcebook on the foundations of social protection delivery systems is a substantial document, but it’s a bit of a curate’s egg: while some parts of it are excellent, others should be avoided. ‘Social protection’ mainly refers to benefits; it seems to take in social work – part of the same process only in some countries – but doesn’t apparently extend to medical care, and social care for older people is largely dismissed in a page (half on page 254, half on pages 264-5). The text is built around a seven-stage process of application and service delivery, shown in the graphic.

I wasn’t convinced at the outset that this was an ideal way to explain how the process of administering benefits and services was translated into practice; that’s partly because they’ve opted not to use or even refer to some well-established literature about claiming, and partly because the labels they use don’t quite capture what they intend to refer to. ‘Outreach’ is about how the intended population is identified, and becomes aware of services prior to claiming; ‘registration’ is mainly about basic documentation; ‘onboarding’ is induction. These stages aren’t necessarily sequential; many services apply eligibility criteria as a part of acquiring information about the population, and needs assessments are sometimes done to winnow out initial enrolment. (I think the point is made for me in chapters 7 and 8, which have to track backwards to get a view about information, contact, referral and verification.)

Despite those reservations, I warmed to the model as the book went on, because it does at least give shape and structure to discussion of the issues. It may be particularly useful for some of the advocates of Basic Income to consider: any viable Basic Income scheme still has to negotiate issues relating to documentation, identity, addresses, banking, how updates and corrections are made, and such like. This is the first document I’ve seen in an age which engages with that kind of issue.

There are, however, some problems with the way that the questions are explored, and arguably they reflect the agenda that the authors are implicitly following, much of which assumes that shiny new IT contracts and commissioning are the way to go. I suspect that some readers will have strong reservations about the criteria the authors of this report set for schemes for disability assessment, which need to be ‘valid, reliable, transparent and standardized’ (p 107) rather than being, if it’s not too wild a leap of the imagination, personal, dignified, expert or sensitive to complexities. The report promotes a sizeable range of approaches using digital tech, but the detailed coverage is fairly casual about many of the familiar problems that come with relying on such approaches – the obstacles the technology presents to claimants, the difficulty of determining whether their personal circumstances fit the boxes they are offered, the role of the officials administering the system (most are not ‘caseworkers’ – a bureaucratic division of labour is more common), and the role of intermediaries. It doesn’t consider, with the main exception of enforcing conditionality, the possibility of using existing institutions such as schools and hospitals as the base for service delivery.

There are eccentricities in the way that benefits are described – the bland acceptance of proxy means testing, for example, the idea that a tapered minimum income is a ‘universal’ policy, and the treatment of grievances as a ‘confidential’ issue, when systematic reporting and review of complaints is essential to public management and scrutiny. Taking the UK as an exemplar for the recording of fraud and error is a bit rich, when the accounts have had to be qualified for years because of it. The entry that grated most, however, was about a ‘predictive tool’ for child protection, in Box 4.10. It claims that the instrument can predict future out-of-home placements “accurately” and “to a high degree”.

> Specifically, for children with a predicted score of 1 (predicted low risk), 1 in 100 were later placed out-of-home within two years of the call. For children with a predicted score of 20 (predicted highest risk), 1 in 2 were later removed from the home within 2 years of the call.

Calling this ‘accurate’ is overstating the case somewhat: if 1 child in 2 is going to be removed from the home, the other 1 child in 2 isn’t. I tried to dig for more information, but couldn’t find it – the source the report cites for this study isn’t public. An earlier published paper on the same project suggests that the account given here is a misinterpretation anyway. The purpose of the scheme was not to predict whether the child ought to be removed to a place of safety, but to stop the people answering the telephone hotline from dismissing calls about child abuse that might otherwise seem innocuous. If similar low-level calls were being made repeatedly, they might all be dismissed in the same way. This looks more like a problem in logging and managing referrals than a problem with casework judgments. But for what it’s worth, predictive tools based on profiling referrals have major limitations when it comes to managing benefit claims, too. The problem is that there are too many variations and complexities for generalisations about claimants to work at the level of the individual.
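The objection to ‘accurate’ is simple arithmetic on the two rates quoted from Box 4.10. Here is a minimal sketch in Python, assuming those rates hold exactly for every child with the given score and using a hypothetical cohort of 1,000 children per score. The names `PLACEMENT_RATE`, `split_cohort` and `share_not_placed` are illustrative only; they come neither from the Sourcebook nor from the study it cites.

```python
from fractions import Fraction

# Two-year out-of-home placement rates as quoted from Box 4.10.
# Assumption: each rate holds exactly for every child given that score.
PLACEMENT_RATE = {1: Fraction(1, 100), 20: Fraction(1, 2)}


def split_cohort(score: int, cohort: int) -> tuple[int, int]:
    """Split a hypothetical cohort of children sharing one score into
    (placed, not_placed) within two years, using the quoted rate."""
    placed = PLACEMENT_RATE[score] * cohort
    if placed.denominator != 1:
        raise ValueError("cohort size must be divisible by the rate's denominator")
    return int(placed), cohort - int(placed)


def share_not_placed(score: int) -> Fraction:
    """Share of children with this score who are NOT placed within two years.
    If the score were read as 'this child will be placed', this is the share
    of children for whom that reading would be wrong."""
    return 1 - PLACEMENT_RATE[score]


for score in (1, 20):
    placed, not_placed = split_cohort(score, 1000)
    print(f"score {score}: {placed} placed, {not_placed} not placed, "
          f"share not placed {share_not_placed(score)}")
```

At score 20 the sketch gives 500 children placed and 500 not placed. Read as a prediction about any one child, the highest score is right half the time, which is the sense in which ‘accurate’ overstates it.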